AI-driven innovation and patient privacy.

AI‑driven innovation and patient privacy capture the tension between technological advancement and the ethical responsibility to protect sensitive health information. As AI transforms diagnostics, care delivery, and operational efficiency, safeguarding patient data becomes not just a compliance issue but a core pillar of trust in modern healthcare.

Enoma Ojo (2024)

1/4/2026 · 4 min read

In its 2019 report “Privacy and health research in a data-driven world”, the U.S. Department of Health and Human Services highlighted the urgency of rethinking privacy as a shared, evolving responsibility in the age of big-data health research. The report warns about the risks and reframes privacy as a strategic pillar for ethical innovation. It describes how large-scale data, from electronic health records to wearable devices, can revolutionize care delivery, diagnostics, and public health. But it also emphasizes that without robust governance, informed consent, and privacy-preserving technologies, this progress risks eroding public trust and exposing individuals to harm. Every connected device, from smart thermostats to industrial sensors, becomes a potential entry point for cyberattacks. The more devices in the network, the more vulnerabilities exist. Many IoT devices lack robust security protocols, making them easy targets for hackers who can exploit outdated firmware, weak authentication, or insecure default settings.

The healthcare industry is undergoing a seismic shift, driven by the rise of AI and data analytics. These technologies offer unprecedented potential for personalized medicine, predictive diagnostics, and operational efficiency. However, this transformation comes with a critical challenge: safeguarding patient privacy. This article underscores that while data is the lifeblood of innovation, its misuse or mishandling can erode public trust and compromise ethical standards.

AI tools like DAX and Abridge streamline clinical documentation and diagnostics, but they operate on a pay-per-use model. These costs are often not reimbursed by insurers, meaning providers must absorb them or increase patient volume to stay financially viable. This creates a paradox: while AI promises efficiency, it can strain budgets and compromise care quality if not strategically managed.

Many healthcare institutions still rely on outdated IT infrastructure that’s incompatible with modern data-sharing protocols. Upgrading these systems to meet privacy and interoperability standards demands significant capital investment, often without immediate ROI. Fragmented data silos, exacerbated by privacy regulations, further drive up costs by impeding seamless care coordination.

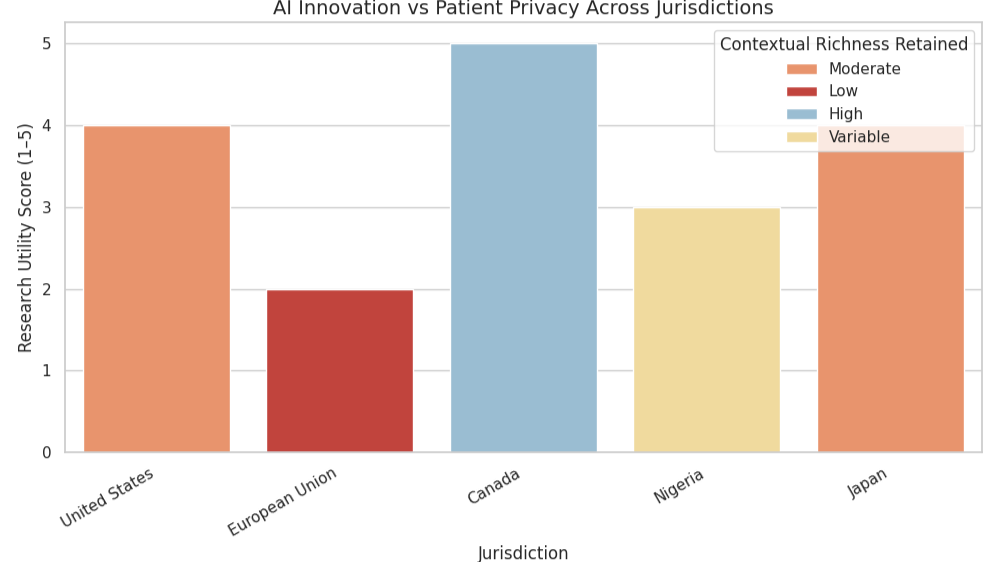

The hidden costs of data-driven healthcare aren’t just financial; they’re systemic. From legacy infrastructure to legal risk, privacy must evolve from a compliance checkbox to a strategic pillar. Let’s build smarter, safer systems.

The global landscape of data governance is marked by a fragmented regulatory patchwork, particularly around consent and de-identification. While some jurisdictions embrace broad consent, allowing personal data to be repurposed for future research, others enforce rigid anonymization protocols that strip datasets of identifiable markers. This divergence reflects deeper philosophical tensions: one side prioritizes scientific progress and flexibility, while the other emphasizes individual autonomy and risk mitigation. The result is a complex compliance maze that researchers must navigate, often at the expense of cross-border collaboration and longitudinal studies.

Although these protective measures are well-intentioned, they frequently undermine the very insights that data-driven research seeks to uncover. Strict anonymization, for instance, may erase critical contextual variables such as geographic location, socioeconomic status, or health history, factors essential for understanding disparities, predicting outcomes, or tailoring interventions. This trade-off between privacy and utility is not merely technical; it’s ethical. When data loses its richness, the populations it represents risk being mischaracterized or excluded from evidence-based policymaking. The challenge, then, is to preserve individual rights without sacrificing analytical depth.

Rather than defaulting to increasingly rigid rules, this article advocates for smarter, adaptive governance frameworks. These would incorporate dynamic consent models, tiered access protocols, and privacy-preserving technologies like differential privacy or federated learning. Such approaches allow for nuanced data use while maintaining trust and transparency. Importantly, they shift the conversation from restriction to stewardship, empowering institutions to act responsibly without stifling innovation. In a world where data is both a public good and a private asset, regulatory evolution must reflect the complexity of that dual role.
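As a rough illustration of the differential privacy idea mentioned above, the sketch below adds Laplace noise to a simple count so that the released number reveals little about any single patient. It is a minimal example under stated assumptions, not a production mechanism: the function names `laplace_noise` and `dp_count`, the sample records, and the chosen `epsilon` are all hypothetical and do not come from the report or from any vendor discussed here.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    A counting query has sensitivity 1 (adding or removing one patient
    changes the true count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical toy records; real data would never be hard-coded like this.
    patients = [
        {"age": 71, "diabetic": True},
        {"age": 45, "diabetic": False},
        {"age": 63, "diabetic": True},
        {"age": 38, "diabetic": False},
    ]
    rng = random.Random()
    noisy = dp_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
    print(f"Noisy diabetic count: {noisy:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy, while a larger `epsilon` gives a more accurate count. In that sense the privacy and utility trade-off described in the text becomes a single tunable parameter rather than an all-or-nothing anonymization rule.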

To navigate this tension, startups like Hero AI and SymetryML are pioneering privacy-preserving technologies. Hero AI focuses on clinical automation in Canada, while SymetryML in the U.S. leverages federated AI, an approach that enables collaborative learning across institutions without moving sensitive data. These models demonstrate that innovation and privacy are not mutually exclusive; rather, they can be harmonized through thoughtful design and governance. The absence of unified global standards for data sharing, AI governance, and ethical oversight creates friction and uncertainty. This gap not only slows innovation but also opens the door to data commodification and exploitation. The author calls for a coordinated effort to build frameworks that prioritize fairness, transparency, and accountability.
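To make the federated idea above more concrete, here is a minimal sketch of federated averaging on a toy problem. It assumes three hypothetical hospitals that each fit a one-parameter model `y ≈ w * x` on their own data and share only the resulting weight and their sample count with a coordinator. The names `local_update` and `federated_average` and the toy datasets are illustrative assumptions; this is not a description of how SymetryML, Hero AI, or any other product actually works.

```python
def local_update(w: float, data, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one site's private (x, y) pairs.

    Only the updated weight leaves the site; the raw pairs never do.
    """
    for _ in range(epochs):
        # Gradient of the mean squared error for the model y ≈ w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(site_datasets, rounds: int = 20, w0: float = 0.0) -> float:
    """Coordinate training across sites by averaging their local weights.

    Each round, every site starts from the shared weight, trains locally,
    and the coordinator combines the results weighted by sample count.
    """
    w = w0
    total = sum(len(data) for data in site_datasets)
    for _ in range(rounds):
        updates = [(local_update(w, data), len(data)) for data in site_datasets]
        w = sum(local_w * n for local_w, n in updates) / total
    return w


if __name__ == "__main__":
    # Hypothetical per-hospital data, all generated from y = 2 * x.
    hospital_a = [(1.0, 2.0), (2.0, 4.0)]
    hospital_b = [(3.0, 6.0)]
    hospital_c = [(1.0, 2.0), (4.0, 8.0), (2.0, 4.0)]
    w = federated_average([hospital_a, hospital_b, hospital_c])
    print(f"Shared model weight: {w:.4f}")
```

Sharing weights instead of records reduces exposure but does not eliminate it, since model updates can still leak information. That is one reason federated learning is often discussed alongside techniques such as differential privacy rather than as a replacement for them.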

Ultimately, this article serves as a compelling call to action: healthcare leaders, technologists, and policymakers must move beyond siloed innovation and toward a shared vision of ethical digital transformation. The stakes are no longer theoretical; data-driven healthcare is reshaping clinical workflows, patient relationships, and institutional accountability. Without intentional collaboration, we risk building systems that prioritize efficiency over empathy and analytics over equity. Protecting human dignity in this new era requires more than compliance; it demands a cultural shift toward transparency, inclusivity, and long-term stewardship. By embracing privacy-preserving technologies such as federated AI, differential privacy, and zero-trust architectures, the industry can unlock transformative potential without compromising public trust. These innovations offer a pathway to scale research, improve diagnostics, and personalize care, while safeguarding sensitive data and respecting individual autonomy. But technology alone is not enough. Robust governance frameworks must be embedded into every layer of digital health infrastructure, ensuring that ethical principles are not just aspirational but operational. This means rethinking procurement, regulation, and even reimbursement models to align incentives with integrity.

© 2025 Enoma Ojo. All rights reserved.